Reinforcement Learning Problem Formulation

- Markov Chain (MC)

- Define (fully observable) states and memoryless (i.e., Markovian) state transitions

- Markov Reward Process (MRP)

- Indicate preferences through a reward function

- Markov Decision Process (MDP)

- Include decisions that can be controlled by an agent's policy

- Partial Observable MDP (POMDP)

- Deal with situations where the true state information isn't available

Markov Chain (MC)

- Markov Property: the current state determines the state transition probability, regardless the history (i.e., memoryless).

- The state transition can be deterministic or stochastic.

- States:

- State transition function:

- States:

Markov Chain, from Wikipedia.

Markov Reward Process (MRP)

- Indicate preferences through reward signals.

- States:

- State transition function:

- Reward function:

- rewards can be defined for each state transition (i.e., edges)

- or can be defined for each state (i.e., vertices)

- States:

Markov Decision Process (MDP)

- Include decisions that are controlled by an agent's policy.

- Involves an agent with its policy

- The agent's goal is to update its policy so as to maximize the total reward (return).

- States:

- Actions:

- Note that

- Note that

- State transition function:

- Reward function:

- rewards can be defined for each state transition (i.e., edges)

- or can be defined for each state (i.e., vertices)

- States:

Markov Decision Process, from Wikipedia.

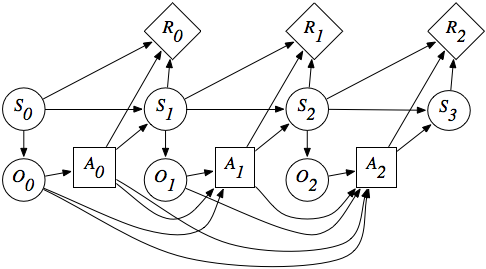

Partial Observable MDP (POMDP)

- Include an observation function

- Agent does not observe the full state features, but can utilize either:

- the current observation

- the observation history

- the action-observation history

- the current observation

- The true state may be approximated by observations based on frame stacking, RNNs, transformers, etc.

SideNote: Markov Chains vs. Markov Decision Processes is analogous to Hidden Markov models (HMM) vs. POMDPs. 1

POMDP, from Section 9.5.6 of Artificial Intelligence: Foundations of Computational Agents.

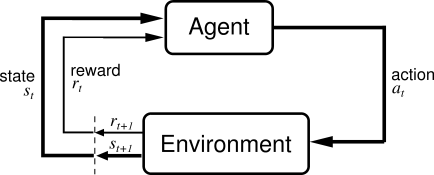

The Agent-Environment Interface

- Training Phase of (Online) RL

- Agent collects experiences/data

- The state transitions & rewards can only be observed through sampling.

- Agent collects experiences/data

- Testing Phase of (Online) RL

- Agent applies the best learned policy.

The Agent-Environment Interface, from Section 3.1 of Reinforcement Learning: An Introduction.

Examples

Formulating tasks as MDPs for RL. Note that these are just minimal examples, where the actual formulation can be much more complicated.

1. Classification

- Goal: Maximize accuracy.

- State: Input Image

- Action:

DogorCat. - Reward:

2. Shortest Path

- Goal: Minimize total distance.

- State: Current vertex.

- Action: Move along an edge.

- Reward:

3. Console Game

- Goal: Maximize total score.

- State: Current game screen.

- Action: Game controls (Up, down, left, right, jump, etc.)

- Reward: Immediate score.

4. Robot Control

- Goal: Control robot to perform tasks.

- State: Joint angles, camera images, etc.

- Action: Target joint angles, etc.

- Reward:

-

See the topic on Markov Chain on Wikipedia. ↩