Diffusion Q-Learning (Diffusion-QL)

Goal: Explore using diffusion models to represent multi-modal policy distributions in offline RL.

Contribution: Achieve state-of-the-art performance on most tasks of the D4RL benchmark.

Motivation

To prevent the policy from generating out-of-distribution actions in offline RL, prior work generally takes one of the following approaches:

- regularizing how far the policy can deviate from the behavior policy

- constraining the learned value function to assign low values to out-of-distribution actions

- introducing model-based methods, which learn a model of the environment dynamics and perform pessimistic planning in the learned Markov decision process (MDP)

- treating offline RL as a problem of sequence prediction with return guidance

This work falls into the first category. Its training objective consists of a behavior-cloning term and a policy-improvement term based on the Q-value.

Typically, methods in the first category perform slightly worse than those in the other categories, for two main reasons:

- Policy classes are not expressive enough: many methods use diagonal Gaussian policies, which cannot represent multi-modal behavior policies.

- Regularization methods are improper: many methods minimize the KL divergence, which may result in mode-covering behavior.

KL-divergence targets require access to explicit density values, and MMD targets need multiple action samples at each state for optimization. Both therefore require an extra step to model the behavior policy of the data, which may introduce further approximation errors.
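A small numeric illustration of both problems. It assumes a hypothetical 1-D action space whose behavior data has two equally likely modes at -1 and +1. Fitting a single Gaussian by maximum likelihood is equivalent to minimizing the forward KL divergence from the data to the model. That fit puts its highest density between the modes, where the data contains essentially no actions. This is only a sketch, not an experiment from the paper.

```python
import math
import random

rng = random.Random(0)

# Hypothetical 1-D behavior actions for a single state: two modes at -1 and +1.
actions = [rng.gauss(-1.0, 0.1) for _ in range(500)] + [rng.gauss(1.0, 0.1) for _ in range(500)]

# Maximum-likelihood Gaussian fit (= minimizing forward KL from data to model):
# the sample mean and the (biased) sample standard deviation.
mu = sum(actions) / len(actions)
sigma = math.sqrt(sum((a - mu) ** 2 for a in actions) / len(actions))


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of N(mean, std^2) evaluated at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


# The fitted policy is most confident at mu, which lies between the two modes...
density_at_mean = gaussian_pdf(mu, mu, sigma)
density_at_mode = gaussian_pdf(1.0, mu, sigma)
# ...yet almost no behavior actions lie near mu, so its favorite action is out of distribution.
frac_near_mean = sum(abs(a - mu) < 0.2 for a in actions) / len(actions)

print(f"mu={mu:.3f}  sigma={sigma:.3f}  fraction of data near mu={frac_near_mean:.3f}")
print(f"density at mu={density_at_mean:.3f}  density at mode +1={density_at_mode:.3f}")
```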

Concept

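The actions are denoted as

- $a^i$ (superscript): the action at diffusion step $i \in \{0, \dots, N\}$, where $a^N \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ is pure noise and $a^0$ is the denoised action executed in the environment

- $a_t$ (subscript): the action at trajectory timestep $t \in \{1, \dots, T\}$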

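Q-value function loss:

$$\mathcal{L}(\phi_i) = \mathbb{E}_{(s_t, a_t, s_{t+1}) \sim \mathcal{D},\; a^0_{t+1} \sim \pi_{\theta'}}\Big[\big\| r(s_t, a_t) + \gamma \min_{j=1,2} Q_{\phi'_j}(s_{t+1}, a^0_{t+1}) - Q_{\phi_i}(s_t, a_t) \big\|^2\Big], \quad i = 1, 2$$

Here $Q_{\phi_1}$ and $Q_{\phi_2}$ are two critics trained with the double Q-learning trick, and $Q_{\phi'_j}$ and $\pi_{\theta'}$ are target networks.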
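A minimal plain-Python sketch of the critic target and loss. It assumes the next action $a^0_{t+1}$ has already been sampled from the target policy. `td_targets`, `critic_loss`, and the toy linear critics are hypothetical names. The terminal-state mask that most implementations multiply into the bootstrap term is omitted for brevity.

```python
from typing import Callable, List, Sequence

# Q(s, a) -> scalar; states and actions are plain float vectors in this sketch.
Critic = Callable[[Sequence[float], Sequence[float]], float]


def td_targets(rewards: Sequence[float], next_states, next_actions,
               target_q1: Critic, target_q2: Critic, gamma: float = 0.99) -> List[float]:
    """y = r + gamma * min_j Q'_j(s', a'), with a' already sampled from the target policy."""
    return [r + gamma * min(target_q1(s2, a2), target_q2(s2, a2))
            for r, s2, a2 in zip(rewards, next_states, next_actions)]


def critic_loss(q: Critic, states, actions, targets: Sequence[float]) -> float:
    """Mean squared TD error for one critic Q_phi_i (targets are treated as constants)."""
    return sum((y - q(s, a)) ** 2 for s, a, y in zip(states, actions, targets)) / len(targets)


# Toy usage with hypothetical linear critics on 1-D states and actions.
def toy_q1(s, a):
    return s[0] + a[0]


def toy_q2(s, a):
    return s[0] + 2.0 * a[0]


ys = td_targets([1.0], [[0.5]], [[1.0]], toy_q1, toy_q2, gamma=0.9)  # 1 + 0.9 * min(1.5, 2.5)
loss1 = critic_loss(toy_q1, [[0.0]], [[1.0]], ys)                      # (2.35 - 1.0) ** 2
```

Taking the minimum of the two target critics biases the target downward, which counters the overestimation that bootstrapping from learned Q-values tends to cause.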

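The policy is defined as the reverse process of a state-conditioned diffusion model:

$$\pi_\theta(a \mid s) = p_\theta(a^{0:N} \mid s) = \mathcal{N}(a^N; \mathbf{0}, \mathbf{I}) \prod_{i=1}^{N} p_\theta(a^{i-1} \mid a^i, s)$$

Each reverse step is Gaussian, as in DDPM:

$$p_\theta(a^{i-1} \mid a^i, s) = \mathcal{N}\big(a^{i-1}; \mu_\theta(a^i, s, i), \beta_i \mathbf{I}\big), \qquad \mu_\theta(a^i, s, i) = \frac{1}{\sqrt{\alpha_i}}\Big(a^i - \frac{\beta_i}{\sqrt{1 - \bar\alpha_i}}\, \epsilon_\theta(a^i, s, i)\Big)$$

Here $\beta_i$ is the noise schedule, $\alpha_i = 1 - \beta_i$, $\bar\alpha_i = \prod_{j=1}^{i} \alpha_j$, and $\epsilon_\theta$ is a noise-prediction network. The final sample $a^0$ is used as the action.

Please note that the gradient of the Q-value with respect to the action is backpropagated through the whole reverse diffusion chain, that is, through every denoising step, into the policy parameters $\theta$.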
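To make the reverse chain concrete, here is a minimal plain-Python sketch of state-conditioned DDPM sampling. The linear $\beta$ schedule, `make_schedule`, `sample_action`, and the oracle noise predictor are hypothetical choices for illustration, not the paper's settings. Plain Python computes no gradients. In an autograd framework such as PyTorch or JAX, every `eps_model` call stays in the computation graph, which is how $\nabla_\theta Q(s, a^0)$ reaches $\theta$ through all $N$ steps.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Schedule = Tuple[List[float], List[float], List[float]]               # (betas, alphas, alpha_bars)
EpsModel = Callable[[List[float], Sequence[float], int], List[float]]  # eps_theta(a^i, s, i)


def make_schedule(n_steps: int, beta_min: float = 1e-4, beta_max: float = 0.2) -> Schedule:
    """Hypothetical linear beta schedule; index k holds the values for diffusion step i = k + 1."""
    betas = [beta_min + (beta_max - beta_min) * k / max(n_steps - 1, 1) for k in range(n_steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)
    return betas, alphas, alpha_bars


def sample_action(state, eps_model: EpsModel, schedule: Schedule, action_dim: int,
                  rng: random.Random) -> List[float]:
    """Run the reverse chain a^N -> ... -> a^0 conditioned on `state` and return a^0."""
    betas, alphas, alpha_bars = schedule
    a = [rng.gauss(0.0, 1.0) for _ in range(action_dim)]  # a^N ~ N(0, I)
    for k in reversed(range(len(betas))):
        eps = eps_model(a, state, k)
        coef = betas[k] / math.sqrt(1.0 - alpha_bars[k])
        mean = [(x - coef * e) / math.sqrt(alphas[k]) for x, e in zip(a, eps)]  # mu_theta
        if k > 0:  # add sqrt(beta_i)-scaled noise on every step except the last one
            a = [m + math.sqrt(betas[k]) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            a = mean
    return a


# Sanity check with an oracle noise predictor for data concentrated at a single action.
schedule = make_schedule(5)
target = [0.3, -0.7]


def oracle_eps(a, state, k):
    # Inverts a^i = sqrt(abar_i) * target + sqrt(1 - abar_i) * eps for eps.
    abar = schedule[2][k]
    return [(x - math.sqrt(abar) * c) / math.sqrt(1.0 - abar) for x, c in zip(a, target)]


a0 = sample_action(state=[0.0], eps_model=oracle_eps, schedule=schedule,
                   action_dim=2, rng=random.Random(0))
```

With the oracle predictor, the last denoising step lands exactly on `target` regardless of the starting noise. This is a quick check that the update rule is wired correctly.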

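Diffusion-QL does not explicitly clone the behavior policy. Instead, the diffusion loss implicitly regularizes the distance between the behavior policy and $\pi_\theta$. This loss is the standard DDPM noise-prediction objective applied to state-action pairs from the dataset:

$$\mathcal{L}_d(\theta) = \mathbb{E}_{i \sim \mathcal{U}\{1, \dots, N\},\; \epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I}),\; (s, a) \sim \mathcal{D}}\Big[\big\| \epsilon - \epsilon_\theta\big(\sqrt{\bar\alpha_i}\, a + \sqrt{1 - \bar\alpha_i}\, \epsilon,\; s,\; i\big) \big\|^2\Big]$$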
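The diffusion loss for a single dataset pair can be sketched as follows. The sketch uses plain Python, a hypothetical hand-picked $\bar\alpha$ sequence, and hypothetical function names. The noised input is built from the dataset action, so reducing this loss pulls $\pi_\theta$ toward the behavior data. That is the implicit behavior-cloning effect.

```python
import math
import random
from typing import Callable, List, Sequence


def diffusion_loss(state: Sequence[float], action: Sequence[float],
                   eps_model: Callable[[List[float], Sequence[float], int], List[float]],
                   alpha_bars: Sequence[float], rng: random.Random) -> float:
    """Single-sample Monte Carlo estimate of L_d for one (s, a) pair from the dataset."""
    k = rng.randrange(len(alpha_bars))               # diffusion step i = k + 1 ~ Uniform{1..N}
    eps = [rng.gauss(0.0, 1.0) for _ in action]      # eps ~ N(0, I)
    noisy = [math.sqrt(alpha_bars[k]) * a + math.sqrt(1.0 - alpha_bars[k]) * e
             for a, e in zip(action, eps)]           # forward-noised dataset action a^i
    pred = eps_model(noisy, state, k)
    return sum((e - p) ** 2 for e, p in zip(eps, pred))  # ||eps - eps_theta(a^i, s, i)||^2


alpha_bars = [0.99, 0.9, 0.7, 0.4, 0.1]  # hypothetical cumulative products for N = 5
data_action = [0.3, -0.7]


def oracle_eps(a_noisy, state, k):
    # Perfect predictor for a dataset that only contains `data_action`.
    return [(x - math.sqrt(alpha_bars[k]) * c) / math.sqrt(1.0 - alpha_bars[k])
            for x, c in zip(a_noisy, data_action)]


def zero_eps(a_noisy, state, k):
    return [0.0 for _ in a_noisy]


loss_oracle = diffusion_loss([0.0], data_action, oracle_eps, alpha_bars, random.Random(1))
loss_zero = diffusion_loss([0.0], data_action, zero_eps, alpha_bars, random.Random(1))
```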

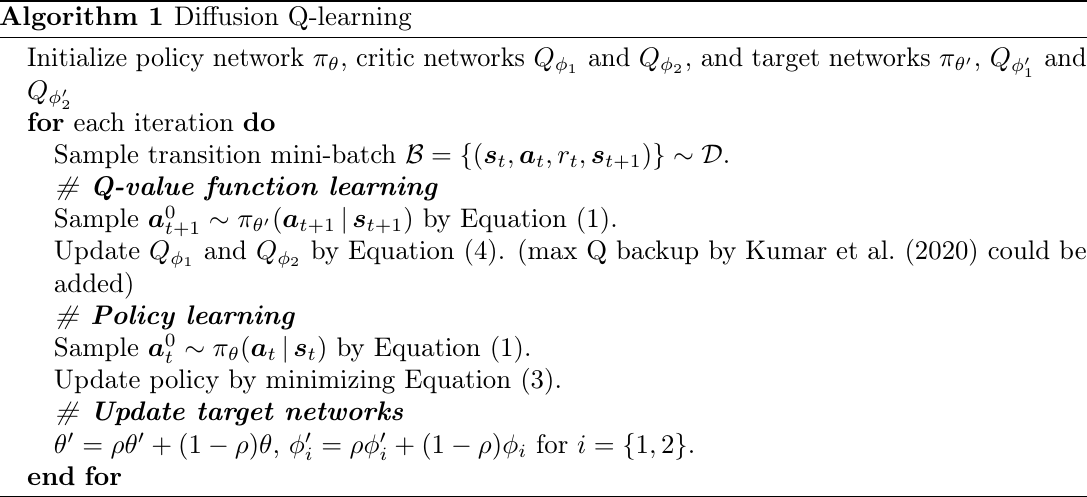

The full algorithm is shown as follows:

from Algorithm 1 of Wang et al., 2022.

where:

- Equation (1) is the reverse diffusion process of DDPM

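- Equation (3) is the policy objective $\mathcal{L}(\theta) = \mathcal{L}_d(\theta) - \alpha \cdot \mathbb{E}_{s \sim \mathcal{D},\, a^0 \sim \pi_\theta}\big[Q_\phi(s, a^0)\big]$. It combines the diffusion loss $\mathcal{L}_d$ (the behavior-cloning term) with Q-value maximization weighted by $\alpha$.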

- Equation (4) is the Q-value function loss
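The way the two terms of the policy objective combine can be sketched as below. The weighting $\alpha = \eta / \text{mean}|Q|$ is an assumed TD3+BC-style normalization. `policy_loss` and `eta` are hypothetical names. The Q-values would come from a critic evaluated at freshly sampled actions $a^0$.

```python
from typing import Sequence


def policy_loss(diffusion_loss_value: float, q_new: Sequence[float], eta: float = 1.0) -> float:
    """L(theta) = L_d(theta) - alpha * mean Q(s, a^0), where a^0 ~ pi_theta.

    alpha = eta / mean|Q| is an assumed normalization. In an autograd framework the
    denominator would be detached (treated as a constant). Assumes not all Q-values are zero.
    """
    mean_abs_q = sum(abs(q) for q in q_new) / len(q_new)
    alpha = eta / mean_abs_q
    return diffusion_loss_value - alpha * sum(q_new) / len(q_new)


loss_small = policy_loss(0.5, [2.0, -2.0, 4.0])     # 0.5 - (3/8) * (4/3)
loss_large = policy_loss(0.5, [20.0, -20.0, 40.0])  # Q rescaled by 10: same value
```

Dividing by mean |Q| makes the Q term invariant to rescaling the Q-values, so the two example calls return the same loss. This lets a single $\eta$ be reused across tasks whose Q-values have very different magnitudes.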

Difference from Diffuser

Let a trajectory be $\tau = (s_1, a_1, s_2, a_2, \dots, s_T, a_T)$.

Diffuser fits a diffusion model to the entire trajectory (i.e., the whole sequence of state-action pairs).

When deployed, the model generates a full trajectory at every environment step, which is computationally inefficient. At each step, it must:

- (re-)sample an entire trajectory based on all previous states and actions

- apply its first action (and record it)

- discard the rest of the generated trajectory
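The per-step replanning loop above can be sketched as follows. `diffuser_style_rollout`, the toy planner and environment, and `denoised_values_per_env_step` are all hypothetical. The cost proxy only counts the scalars passed through the denoiser per environment step. It assumes the same number of diffusion steps for both methods and ignores network size.

```python
from typing import Callable, List, Sequence, Tuple


def diffuser_style_rollout(reset: Callable[[], float], step: Callable[[float], float],
                           sample_trajectory: Callable[[float, list], Sequence[float]],
                           n_env_steps: int) -> List[Tuple[float, float]]:
    """Receding-horizon control: plan a whole trajectory, execute one action, replan."""
    s = reset()
    history: List[Tuple[float, float]] = []
    for _ in range(n_env_steps):
        plan = sample_trajectory(s, history)  # (1) re-sample an entire trajectory
        a = plan[0]                           # (2) apply only its first action ...
        history.append((s, a))                # ... and record it
        s = step(a)
        # (3) the remaining len(plan) - 1 planned actions are discarded
    return history


def denoised_values_per_env_step(n_diffusion: int, action_dim: int,
                                 horizon: int = 1, state_dim: int = 0) -> int:
    """Scalars passed through the denoiser per environment step (a rough cost proxy)."""
    return n_diffusion * horizon * (state_dim + action_dim)


planner_calls = []
env = {"s": 0.0}


def toy_planner(s, history):
    planner_calls.append(s)
    return [1.0] * 4  # hypothetical 4-step plan of constant actions


def toy_reset():
    env["s"] = 0.0
    return env["s"]


def toy_step(a):
    env["s"] += a
    return env["s"]


trace = diffuser_style_rollout(toy_reset, toy_step, toy_planner, n_env_steps=3)
# Hypothetical sizes: 5 diffusion steps, horizon 32, 11-D states, 3-D actions.
cost_traj = denoised_values_per_env_step(5, action_dim=3, horizon=32, state_dim=11)
cost_action = denoised_values_per_env_step(5, action_dim=3)
```

The planner runs once per environment step, and only one action of each plan is ever used. Denoising a single action instead of a horizon of state-action pairs shrinks the per-step work by roughly a factor of horizon times (state_dim + action_dim) / action_dim under these assumptions.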

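Diffusion-QL models only the single-step policy $\pi_\theta(a \mid s)$. At each environment step it runs one reverse diffusion chain over a single action conditioned on the current state and executes the result directly, with no trajectory to re-sample.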

Experiments

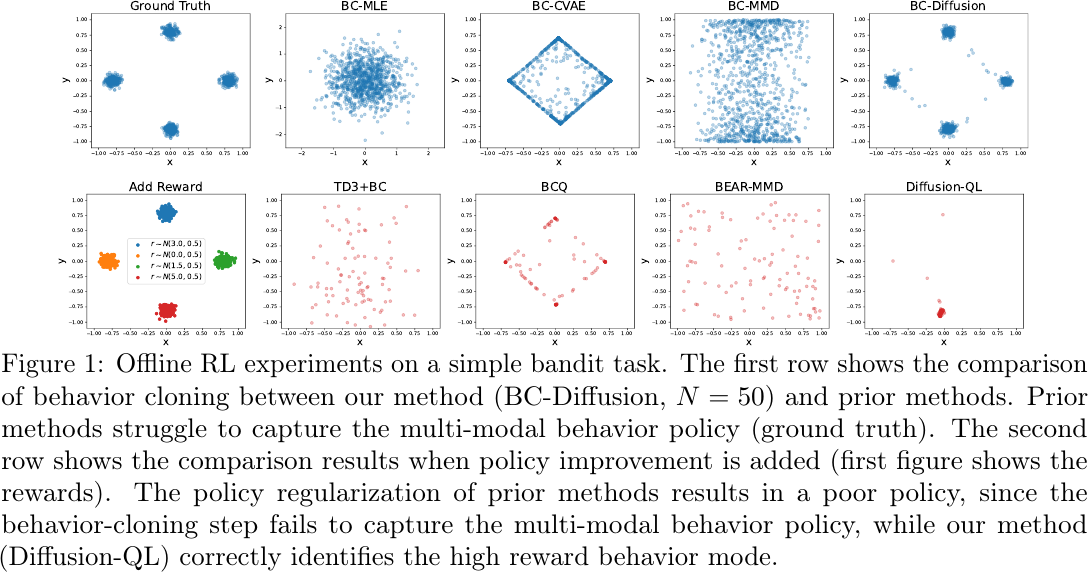

Simple Bandit Task

The bandit task has strong multi-modality.

Offline RL experiments on a simple bandit task, from Figure 1 of Wang et al., 2022.

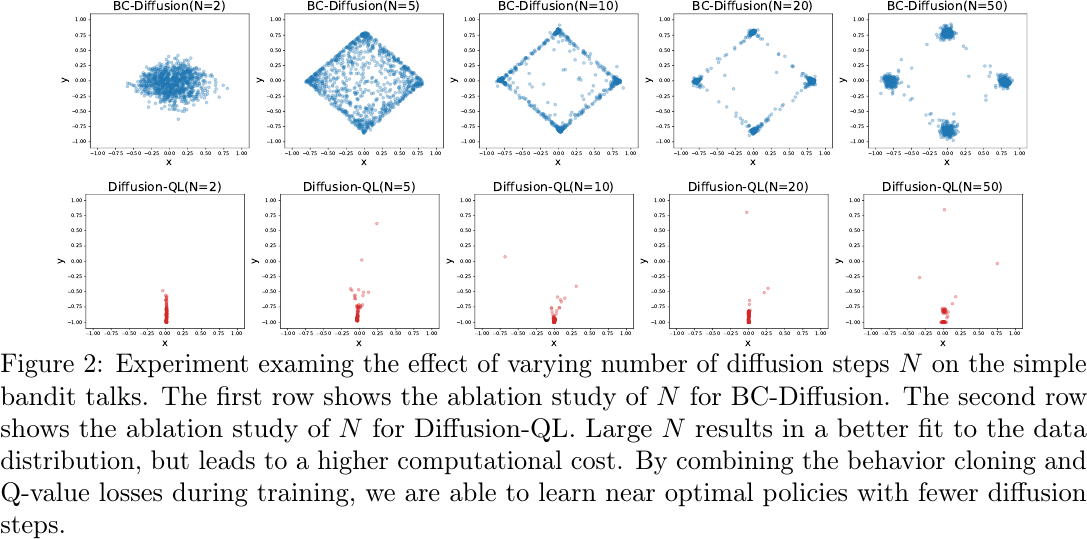

Experiment examining the effect of varying the number of diffusion steps

The authors use

Benchmark on D4RL

Diffusion-QL outperforms the baselines on most D4RL tasks.

Official Resources

- [ICLR 2023] Diffusion Policies as an Expressive Policy Class for Offline Reinforcement Learning [arxiv][paper][code] (citations: 16, 23, as of 2023-04-28)