Autoregressive Models (AR/ARM)

Motivation: Factorize the joint distribution into 1D conditional distributions, circumventing the need to model the (computationally expensive) high-dimensional joint distribution directly.

For example, consider a scenario involving

Parameterization

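Factorize the joint distribution into 1D conditional distributions using the chain rule of probability:

$$p(\mathbf{x}) = p(x_1, \dots, x_D) = \prod_{i=1}^{D} p(x_i \mid \mathbf{x}_{<i}),$$

where $\mathbf{x}_{<i} = (x_1, \dots, x_{i-1})$ denotes the dimensions preceding $x_i$, and the first term is simply $p(x_1)$ since $\mathbf{x}_{<1}$ is empty.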
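To make the factorization concrete, the following minimal sketch (standard library only) builds a small, made-up joint distribution over three binary variables, derives each 1D conditional $p(x_i \mid \mathbf{x}_{<i})$ by marginalization, and checks that their product recovers the joint probability of every configuration. The distribution and the helper names (`prefix_prob`, `conditional`, `chain_rule`) are illustrative assumptions, not part of any library.

```python
import itertools
import random

# Hypothetical joint distribution over three binary variables (x1, x2, x3),
# built from random weights purely for illustration.
random.seed(0)
weights = {x: random.random() for x in itertools.product([0, 1], repeat=3)}
total = sum(weights.values())
joint = {x: w / total for x, w in weights.items()}


def prefix_prob(prefix):
    """Marginal probability p(x_1, ..., x_k) of a prefix, summing out the rest."""
    k = len(prefix)
    return sum(p for x, p in joint.items() if x[:k] == tuple(prefix))


def conditional(value, prefix):
    """1D conditional p(x_i = value | x_<i = prefix) = p(x_<i, x_i) / p(x_<i)."""
    return prefix_prob(tuple(prefix) + (value,)) / prefix_prob(prefix)


def chain_rule(x):
    """Product of the 1D conditionals p(x_1) p(x_2 | x_1) p(x_3 | x_1, x_2)."""
    prob = 1.0
    for i in range(len(x)):
        prob *= conditional(x[i], x[:i])
    return prob


max_error = max(abs(chain_rule(x) - joint[x]) for x in joint)
print(max_error)  # only floating-point round-off: the factorization is exact
```

The factorization itself is exact for any ordering of the dimensions; an autoregressive model learns approximations of the conditionals directly instead of deriving them from a known joint as done here.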

Connection to RNNs

The condition of each term

- (First-order) Markov assumption: $p(x_i \mid \mathbf{x}_{<i}) = p(x_i \mid x_{i-1})$

- Hidden Markov model (HMM):

- Under the special case that

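As a minimal sketch of the RNN view (assuming binary data, a single-layer tanh RNN, and randomly initialized parameters whose names are hypothetical), the hidden state $h_{i-1}$ acts as a fixed-size summary of $\mathbf{x}_{<i}$, so each conditional only needs $h_{i-1}$ rather than the full, growing prefix; the first-order Markov assumption instead truncates the condition to $x_{i-1}$. Because every conditional is a valid Bernoulli distribution, the resulting $p(\mathbf{x})$ is normalized regardless of the parameter values, which the last lines check by brute force.

```python
import itertools

import numpy as np

rng = np.random.default_rng(0)
HIDDEN = 8

# Hypothetical, randomly initialized (untrained) parameters of a tiny tanh RNN.
w_in = rng.normal(size=HIDDEN)
W_hh = 0.5 * rng.normal(size=(HIDDEN, HIDDEN))
b_h = np.zeros(HIDDEN)
w_out = rng.normal(size=HIDDEN)
b_out = 0.0


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def rnn_log_prob(x):
    """log p(x) for a binary sequence x under an RNN autoregressive model.

    p(x_i = 1 | x_<i) = sigmoid(w_out . h_{i-1} + b_out), where the hidden state
    h_{i-1} is a fixed-size summary of the whole prefix x_<i.
    """
    h = np.zeros(HIDDEN)  # h_0: summary of the empty prefix
    log_p = 0.0
    for x_i in x:
        p_one = sigmoid(w_out @ h + b_out)  # the conditional sees x_<i only through h
        log_p += np.log(p_one if x_i == 1 else 1.0 - p_one)
        h = np.tanh(w_in * x_i + W_hh @ h + b_h)  # fold x_i into the state
    return log_p


# Every conditional is normalized, so p(x) sums to 1 over all 2^D binary sequences.
D = 5
total = sum(np.exp(rnn_log_prob(x)) for x in itertools.product([0, 1], repeat=D))
print(total)
```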

The conditionals

- Discrete distributions

- Categorical distributions: parameterized by


- Continuous distributions

- (Mixture of) Gaussians: parameterized by

- Cumulative distributions: autoregressive implicit quantile networks (AIQN), etc.

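The two most common conditional heads can be sketched as follows, assuming the network has already produced the parameters from $\mathbf{x}_{<i}$: a categorical distribution given unnormalized logits, and a 1D mixture of Gaussians given mixture-weight logits, means, and log standard deviations. The function names and the log-parameterization of the standard deviations are assumptions for illustration; both heads are evaluated in log space with `np.logaddexp.reduce` for numerical stability.

```python
import numpy as np


def categorical_log_prob(logits, k):
    """log p(x_i = k | x_<i) for a categorical conditional given K unnormalized logits."""
    logits = np.asarray(logits, dtype=float)
    return logits[k] - np.logaddexp.reduce(logits)  # log-softmax evaluated at k


def mog_log_prob(x, weight_logits, means, log_stds):
    """log p(x_i = x | x_<i) for a 1D mixture of K Gaussians."""
    weight_logits = np.asarray(weight_logits, dtype=float)
    means = np.asarray(means, dtype=float)
    stds = np.exp(np.asarray(log_stds, dtype=float))  # log-parameterization keeps stds > 0
    log_w = weight_logits - np.logaddexp.reduce(weight_logits)  # log mixture weights
    log_normal = (-0.5 * ((x - means) / stds) ** 2
                  - np.log(stds) - 0.5 * np.log(2.0 * np.pi))
    return np.logaddexp.reduce(log_w + log_normal)  # log sum_k w_k N(x; mu_k, sigma_k)


# In a real model these parameters would be network outputs computed from x_<i.
print(categorical_log_prob([2.0, 0.5, -1.0], k=0))
print(mog_log_prob(0.3, weight_logits=[0.0, 1.0], means=[-1.0, 1.0], log_stds=[0.0, -0.5]))
```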

In practice, while we only define the model architecture of

Pointwise Evaluation

- Pointwise evaluation of $p(\mathbf{x})$

- Yes, as long as

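- Simply compute $p(\mathbf{x}) = \prod_{i=1}^{D} p(x_i \mid \mathbf{x}_{<i})$ (in practice, $\log p(\mathbf{x}) = \sum_{i=1}^{D} \log p(x_i \mid \mathbf{x}_{<i})$, to avoid numerical underflow).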

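A minimal sketch of pointwise evaluation, assuming a hypothetical `conditional_logits` function that plays the role of the network and returns the logits of all $D$ conditionals at once. Because every $x_i$ is observed, the conditionals can be evaluated in parallel (which is what masked convolutions or causal attention make possible in practice); the toy first-order Markov table below is only a stand-in for a trained model. The last lines also show why log probabilities are summed instead of multiplying probabilities.

```python
import numpy as np


def log_softmax(logits):
    m = logits.max(axis=-1, keepdims=True)
    return logits - m - np.log(np.exp(logits - m).sum(axis=-1, keepdims=True))


def log_prob(x, conditional_logits):
    """log p(x) = sum_i log p(x_i | x_<i) for a fully observed integer sequence x.

    `conditional_logits` is a hypothetical stand-in for the network: it returns a
    (D, K) array whose row i holds the logits of p(x_i | x_<i). Since x is known,
    all D rows can be computed in one pass; nothing has to be sampled.
    """
    lp = log_softmax(conditional_logits(x))  # (D, K) log-probabilities
    return lp[np.arange(len(x)), x].sum()    # pick log p(x_i | x_<i) and sum


# Toy first-order Markov "model": row i depends only on x_{i-1} through a fixed table.
K = 4
rng = np.random.default_rng(0)
table = rng.normal(size=(K + 1, K))  # row K is used for x_1, which has an empty prefix


def markov_logits(x):
    prev = np.concatenate([[K], x[:-1]])  # shift right so position i sees x_{i-1}
    return table[prev]


x = rng.integers(0, K, size=2000)
lp = log_prob(x, markov_logits)
naive = np.prod(np.exp(log_softmax(markov_logits(x))[np.arange(len(x)), x]))
print(lp, naive)  # the sum of logs stays finite; the raw product of 2000 probabilities underflows
```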

Data Generation

- Generating new samples

- Yes, as long as

- Sample each dimension autoregressively: first draw $x_1 \sim p(x_1)$, then $x_i \sim p(x_i \mid \mathbf{x}_{<i})$ for $i = 2, \dots, D$.


- Conditional generation

- Yes, simply define


- Imputation
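Sampling, in contrast to pointwise evaluation, is inherently sequential. The sketch below implements ancestral sampling against a hypothetical `conditional_probs` callable, with a toy "sticky" conditional as a stand-in for a trained model. Passing a non-empty `prefix` keeps those dimensions fixed and samples only the rest, which is one simple form of conditional generation; the function and parameter names are assumptions for illustration.

```python
import numpy as np


def sample(conditional_probs, D, rng, prefix=()):
    """Ancestral sampling: draw x_i ~ p(x_i | x_<i) one dimension at a time.

    `conditional_probs` is a hypothetical stand-in for the model: given the tuple
    x_<i it returns the probability vector of p(x_i | x_<i). Values in `prefix`
    are kept fixed and only the remaining dimensions are sampled.
    """
    x = list(prefix)
    for _ in range(len(prefix), D):
        p = conditional_probs(tuple(x))
        x.append(int(rng.choice(len(p), p=p)))
    return x


K = 3


def sticky_probs(prefix):
    """Toy conditional: x_1 is uniform; afterwards x_{i-1} is repeated with probability 0.9."""
    if not prefix:
        return np.full(K, 1.0 / K)
    p = np.full(K, 0.1 / (K - 1))
    p[prefix[-1]] = 0.9
    return p


rng = np.random.default_rng(0)
print(sample(sticky_probs, D=10, rng=rng))                 # unconditional sample
print(sample(sticky_probs, D=10, rng=rng, prefix=(2, 0)))  # continues a fixed prefix
```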

Training Target

We need to derive

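The Negative Log-Likelihood (NLL) loss function over a training set $\{\mathbf{x}^{(n)}\}_{n=1}^{N}$ is defined as:

$$\mathcal{L}(\theta) = -\frac{1}{N} \sum_{n=1}^{N} \log p_\theta(\mathbf{x}^{(n)}) = -\frac{1}{N} \sum_{n=1}^{N} \sum_{i=1}^{D} \log p_\theta(x_i^{(n)} \mid \mathbf{x}_{<i}^{(n)}),$$

which can be optimized based on its gradients:

$$\nabla_\theta \mathcal{L}(\theta) = -\frac{1}{N} \sum_{n=1}^{N} \sum_{i=1}^{D} \nabla_\theta \log p_\theta(x_i^{(n)} \mid \mathbf{x}_{<i}^{(n)}).$$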
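For categorical conditionals, the gradient of the NLL with respect to each row of logits has the closed form $\mathrm{softmax}(\text{logits}) - \mathrm{onehot}(x_i)$; in a real model, backpropagation (automatic differentiation) carries this gradient on to the parameters $\theta$. The sketch below computes the NLL of a single sequence (the $1/N$ average over the training set is omitted), uses random logits as a stand-in for network outputs, and checks the analytic gradient with central finite differences. `nll_and_grad` is a hypothetical helper name.

```python
import numpy as np


def nll_and_grad(logits, x):
    """NLL of one sequence and its gradient with respect to the conditional logits.

    logits: (D, K) array; row i holds the logits of p_theta(x_i | x_<i)
    x:      (D,) integer array of observed values
    Returns -sum_i log p_theta(x_i | x_<i) and d(NLL)/d(logits) = softmax(logits) - onehot(x).
    """
    m = logits.max(axis=1, keepdims=True)
    log_probs = logits - m - np.log(np.exp(logits - m).sum(axis=1, keepdims=True))
    rows = np.arange(len(x))
    nll = -log_probs[rows, x].sum()
    grad = np.exp(log_probs)  # softmax(logits)
    grad[rows, x] -= 1.0      # minus the one-hot targets
    return nll, grad


rng = np.random.default_rng(0)
logits = rng.normal(size=(5, 4))
x = rng.integers(0, 4, size=5)
nll, grad = nll_and_grad(logits, x)

# Central finite differences as an independent check of the analytic gradient.
eps = 1e-6
numeric = np.zeros_like(logits)
for idx in np.ndindex(*logits.shape):
    bumped = logits.copy()
    bumped[idx] += eps
    dipped = logits.copy()
    dipped[idx] -= eps
    numeric[idx] = (nll_and_grad(bumped, x)[0] - nll_and_grad(dipped, x)[0]) / (2 * eps)
print(np.max(np.abs(numeric - grad)))
```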

Examples

PixelCNNs

Visualization of conditional distributions, from Figure 2 of Oord et al., 2016.


- For images,

- The pixels of the image are ordered sequentially by concatenating the rows:

- The color channels are ordered sequentially by concatenating the RGB values:

- Every


- Code implementation:

- Output as categorical distribution: See the `low` and `high` parameters in the TensorFlow implementation, or the `PixelCNN(pl.LightningModule)` module in phlippe/uvadlc_notebooks.

- The output of the model lies in


- Output as binary categorical distribution: When the data only contains black-and-white images, the output shape can be the same as the input shape to save parameters, as in the Keras implementation or in EugenHotaj/pytorch-generative.

- Please note that this technique only works in the special case where the data is binary-valued.

- Sampling: See the `def sample` function in phlippe/uvadlc_notebooks.

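PixelCNN enforces the raster-scan pixel ordering with masked convolutions: each kernel is multiplied elementwise by a mask that zeroes the weights for pixels that come later in the ordering. The sketch below is a simplified single-channel version (it ignores the RGB channel ordering within a pixel) with a naive NumPy convolution; `causal_conv_mask` and `masked_conv2d` are hypothetical helpers, not functions from the implementations linked above. Type `"A"` also masks the center pixel and is meant for the first layer, so the prediction for $x_i$ never sees $x_i$ itself; type `"B"` keeps the center and is meant for subsequent layers.

```python
import numpy as np


def causal_conv_mask(kernel_size, mask_type):
    """Spatial mask for a PixelCNN-style masked convolution (single channel).

    Under raster-scan ordering, the output at pixel (r, c) may only depend on pixels
    in rows above r, or to the left of c in row r. Type "A" also hides the center
    pixel; type "B" keeps it.
    """
    if kernel_size % 2 != 1 or mask_type not in ("A", "B"):
        raise ValueError("kernel_size must be odd and mask_type must be 'A' or 'B'")
    center = kernel_size // 2
    mask = np.ones((kernel_size, kernel_size))
    mask[center, center + (mask_type == "B"):] = 0.0  # right of center (and the center for "A")
    mask[center + 1:, :] = 0.0                        # every row below the center
    return mask


def masked_conv2d(image, kernel, mask):
    """Naive zero-padded 'same' 2D cross-correlation with a masked kernel."""
    k = kernel.shape[0]
    padded = np.pad(image, k // 2)
    weights = kernel * mask
    out = np.zeros(image.shape)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            out[r, c] = np.sum(padded[r:r + k, c:c + k] * weights)
    return out


print(causal_conv_mask(3, "A"))
print(causal_conv_mask(3, "B"))
```

In a deep-learning framework, the same mask would typically be multiplied into the convolution weights before each forward pass, so that stacking layers never leaks information from later pixels.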

(Generative) Transformers

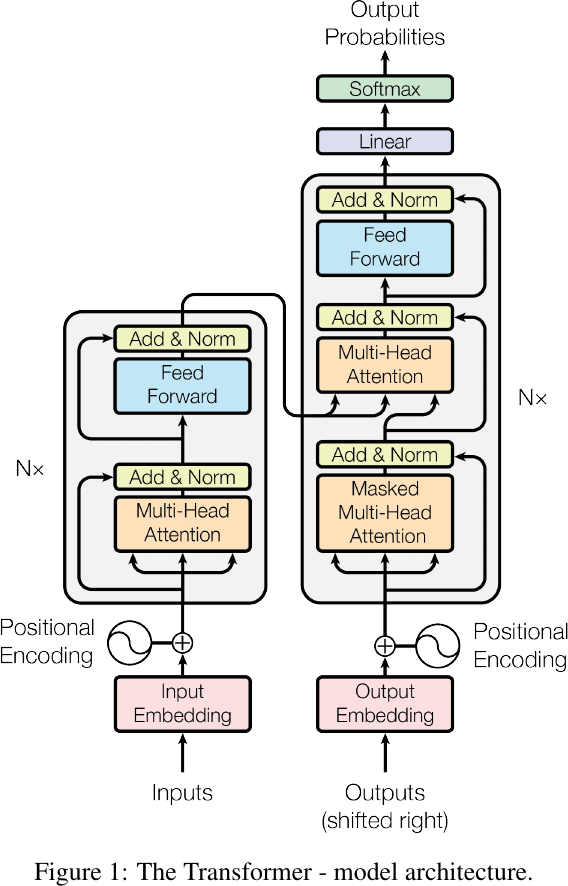

The Transformer model architecture, from Figure 1 of Vaswani et al., 2017.

- For natural language processing,

- Every

- The vocabulary size depends on the tokenizer used. For example, the GPT-2 tokenizer has a vocabulary size of 50,257.

- Every

- Code implementation:

- Dimensions of input

See the `Embeddings(nn.Module)` module in harvardnlp/annotated-transformer.

- Please note that `nn.Transformer` in PyTorch works in embedding space. An extra `nn.Embedding` lookup operator followed by a `PositionalEncoding` is required to map the input to embedding space.


- Categorical distribution: See the `Generator(nn.Module)` module in harvardnlp/annotated-transformer.

- Please note that `nn.Transformer` in PyTorch works in embedding space. An extra `nn.Linear` layer and a log-softmax operator are required to map the output from embedding space to a distribution over the vocabulary.

- The output of the model lies in


- Sampling: See the `def greedy_decode` part in harvardnlp/annotated-transformer, or the `for i in range(args.words)` part in pytorch/examples.


- The overall inference process from an application viewpoint involves the following steps:

- The input text (character sequence) is tokenized into tokens (character/byte sequences), which are then mapped to token IDs (integers). These token IDs are further transformed into a sequence of embeddings (vector sequence).

- After that, the vector sequence is input into the Transformer model, producing another sequence of embeddings (vector sequence), which in turn are transformed into logits (number sequence).

- Lastly, the logits are normalized into a sequence of probabilities (number sequence) by the softmax operator, which can be used to sample the next token ID (integer) of the output sequence. This sampling process can be repeated to generate a sequence of token IDs (integer sequence), which can be mapped back to tokens (character/byte sequences), ultimately yielding the final output text (character sequence).
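The steps above can be traced end to end with a toy stand-in. The sketch assumes a hypothetical character-level tokenizer (real tokenizers such as GPT-2's operate on subword/byte tokens) and replaces the embedding, Transformer, and output projection with a random bigram logits table (`toy_model`), so only the data flow is meaningful, not the generated text. All names here (`encode`, `decode`, `toy_model`, `generate`) are illustrative; `generate` supports both sampling from the softmax distribution and greedy decoding, which always picks the most probable token.

```python
import numpy as np

# Hypothetical character-level tokenizer: every character is one token.
vocab = sorted(set("abcdefghijklmnopqrstuvwxyz ."))
token_to_id = {t: i for i, t in enumerate(vocab)}
id_to_token = {i: t for t, i in token_to_id.items()}
V = len(vocab)


def encode(text):
    """Text -> tokens -> token IDs."""
    return [token_to_id[ch] for ch in text]


def decode(ids):
    """Token IDs -> tokens -> text."""
    return "".join(id_to_token[i] for i in ids)


rng = np.random.default_rng(0)
bigram_logits = rng.normal(size=(V, V))  # random stand-in for a trained network


def toy_model(ids):
    """Stand-in for embedding + Transformer + output projection.

    Returns a (len(ids), V) array; row t holds the logits for the token after
    position t (this toy only looks at ids[t]).
    """
    return bigram_logits[np.asarray(ids)]


def softmax(z):
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def generate(prompt, max_new_tokens, rng, greedy=False):
    ids = encode(prompt)
    for _ in range(max_new_tokens):
        probs = softmax(toy_model(ids)[-1])  # logits of the next token -> probabilities
        next_id = int(np.argmax(probs)) if greedy else int(rng.choice(V, p=probs))
        ids.append(next_id)                  # feed the new token back in
    return decode(ids)


print(generate("hello", 10, rng))
print(generate("hello", 10, rng, greedy=True))
```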

Community Resources

- Probabilistic Machine Learning: Advanced Topics (i.e., probml-book2)

- Chapter 22: Auto-regressive models