AlphaTensor

Goal: Discover faster (matrix multiplication) algorithms with RL.

Contribution: Discover SOTA algorithms for solving several math problems.

Concept

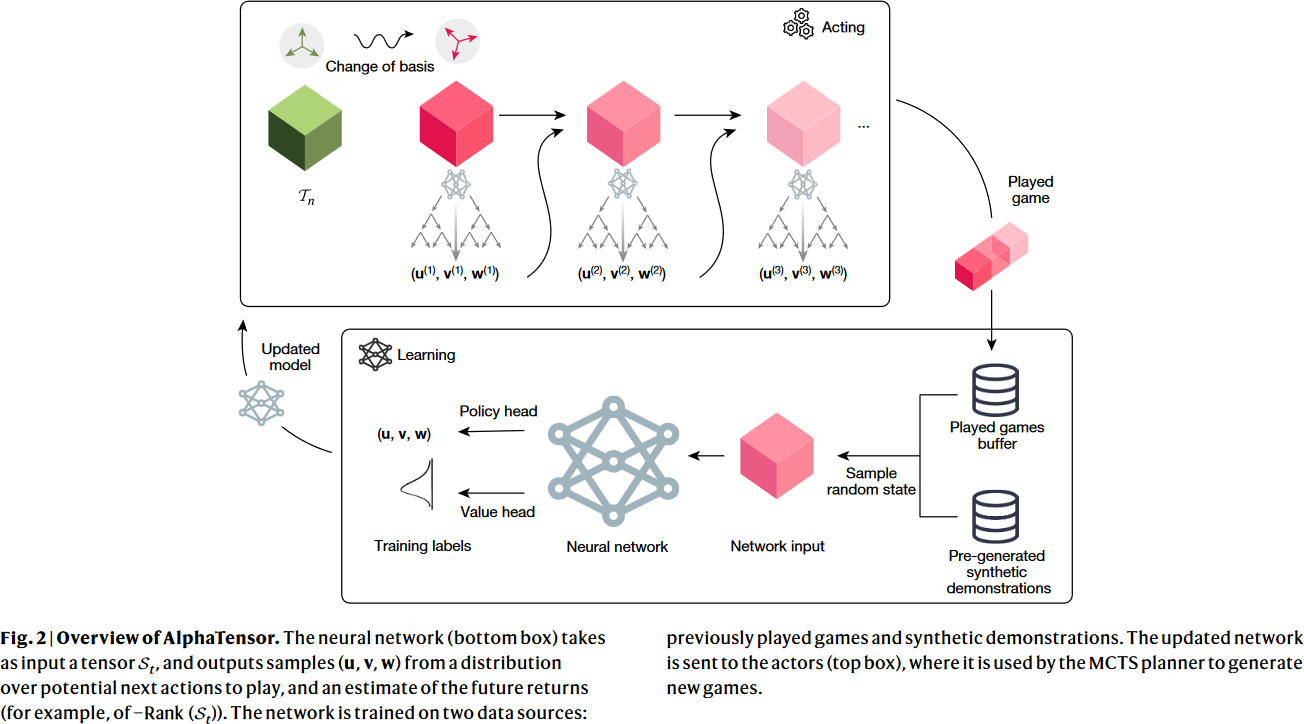

Overview of AlphaTensor, from DeepMind Blog.

Single-player game played by AlphaTensor, where the goal is to find a correct matrix multiplication algorithm. The state of the game is a cubic array of numbers (shown as grey for 0, blue for 1, and green for -1), representing the remaining work to be done.


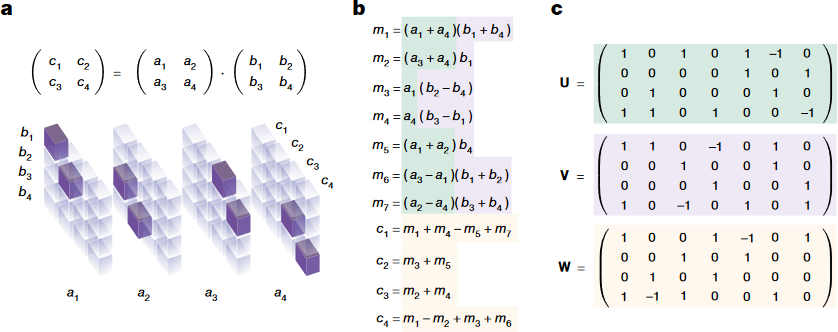

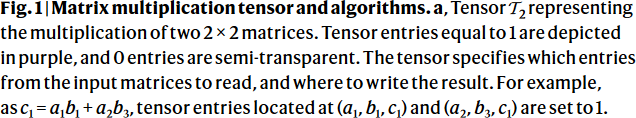

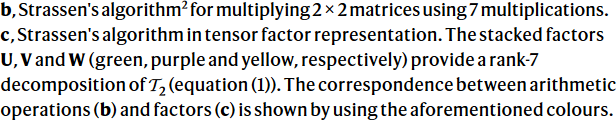

Formulate the matrix multiplication problem as a tensor decomposition problem.

This formulation is discussed in prior work, e.g. (Lim 2021, p.67, Example 3.9).
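A minimal sketch of this formulation, under an assumed indexing convention (matrices flattened row-major; the helper names `matmul_tensor` and `multiply_via_tensor` are hypothetical and not from the paper). It builds the 0/1 tensor whose entry `T[a][b][c]` says whether the product of the `a`-th entry of A and the `b`-th entry of B contributes to the `c`-th entry of C, then multiplies two matrices by contracting with that tensor.

```python
def matmul_tensor(n, m, p):
    """Tensor of the (n x m) times (m x p) matrix product.

    T[a][b][c] == 1 iff A_flat[a] * B_flat[b] is a term of C_flat[c],
    with all three matrices flattened in row-major order (an assumption
    of this sketch; other references may index C transposed).
    """
    T = [[[0] * (n * p) for _ in range(m * p)] for _ in range(n * m)]
    for i in range(n):
        for j in range(m):
            for k in range(p):
                # C[i][k] += A[i][j] * B[j][k]
                T[i * m + j][j * p + k][i * p + k] = 1
    return T


def multiply_via_tensor(T, A, B):
    """Compute A @ B by contracting the flattened inputs with T."""
    n, p = len(A), len(B[0])
    a = [x for row in A for x in row]
    b = [x for row in B for x in row]
    c = [
        sum(T[i][j][k] * a[i] * b[j]
            for i in range(len(a)) for j in range(len(b)))
        for k in range(n * p)
    ]
    return [c[r * p:(r + 1) * p] for r in range(n)]


T2 = matmul_tensor(2, 2, 2)
product = multiply_via_tensor(T2, [[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

For 2x2 matrices the tensor has shape 4x4x4 with eight nonzero entries, one per scalar multiplication of the schoolbook algorithm.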

Represent a matrix multiplication algorithm as a tensor.

A


Execute the matrix multiplication algorithm based on the decomposition of the tensor.

from Algorithm 1 of Fawzi et al., 2022.

Example: The tensor and its decomposition of Strassen's algorithm (

Matrix multiplication tensor and algorithms, from Fig.1 of Fawzi et al., 2022.

Please note that the (column) vectors

- The elements

- The elements

- The elements

- The elements
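The Strassen example can be checked with a short sketch. The factor lists below encode the standard Strassen algorithm for 2x2 matrices with row-major flattening; storing one row per rank-one term (rather than one column, as in the paper's figure) and the helper names `reconstruct`, `matmul_tensor_2x2`, and `run_algorithm` are choices of this sketch. `run_algorithm` follows the general recipe of computing one product per rank-one term and combining the products linearly.

```python
# Row r of U, V, W holds the r-th rank-one term (u_r, v_r, w_r).
# Flattening: a = [a11, a12, a21, a22], likewise for b and c.
U = [[1, 0, 0, 1], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 0, 1],
     [1, 1, 0, 0], [-1, 0, 1, 0], [0, 1, 0, -1]]
V = [[1, 0, 0, 1], [1, 0, 0, 0], [0, 1, 0, -1], [-1, 0, 1, 0],
     [0, 0, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1]]
W = [[1, 0, 0, 1], [0, 0, 1, -1], [0, 1, 0, 1], [1, 0, 1, 0],
     [-1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0]]


def reconstruct(U, V, W):
    """Sum the rank-one terms u_r (x) v_r (x) w_r into a 3-D tensor."""
    I, J, K = len(U[0]), len(V[0]), len(W[0])
    return [[[sum(u[i] * v[j] * w[k] for u, v, w in zip(U, V, W))
              for k in range(K)] for j in range(J)] for i in range(I)]


def matmul_tensor_2x2():
    """The 4x4x4 tensor of 2x2 matrix multiplication (row-major)."""
    T = [[[0] * 4 for _ in range(4)] for _ in range(4)]
    for i in range(2):
        for j in range(2):
            for k in range(2):
                T[2 * i + j][2 * j + k][2 * i + k] = 1
    return T


def run_algorithm(U, V, W, A, B):
    """Multiply A and B using the bilinear algorithm given by the factors."""
    a = [x for row in A for x in row]
    b = [x for row in B for x in row]
    # One scalar multiplication per rank-one term.
    m = [sum(ui * ai for ui, ai in zip(u, a)) *
         sum(vj * bj for vj, bj in zip(v, b))
         for u, v in zip(U, V)]
    # Each output entry is a linear combination of the products.
    c = [sum(w[k] * m_r for w, m_r in zip(W, m)) for k in range(len(W[0]))]
    p = len(B[0])
    return [c[r * p:(r + 1) * p] for r in range(len(A))]


is_valid = reconstruct(U, V, W) == matmul_tensor_2x2()
result = run_algorithm(U, V, W, [[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

The decomposition has seven terms, so the algorithm uses seven scalar multiplications instead of the eight of the schoolbook method.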


Formulate the tensor decomposition problem as a game named TensorGame.

- State:

- Action:

- Terminate Condition: when

- Reward:
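The game loop can be sketched as a toy environment. The notes above leave the state, action, termination, and reward details blank, so everything concrete here is an assumption of the sketch: the state is the residual tensor, an action is a triple of vectors `(u, v, w)` whose outer product is subtracted, the game ends when the residual is zero or a step limit is hit, each move costs -1, and an unsolved game receives an extra penalty. The penalty used below (the count of nonzero residual entries) is a simple stand-in, and the class name `TensorGame` with its methods is hypothetical.

```python
class TensorGame:
    """Toy single-player game: subtract rank-one tensors until zero."""

    def __init__(self, target, max_steps):
        # Copy so the caller's target tensor is never mutated.
        self.state = [[row[:] for row in mat] for mat in target]
        self.max_steps = max_steps
        self.steps = 0

    def is_zero(self):
        return all(x == 0 for mat in self.state for row in mat for x in row)

    def step(self, u, v, w):
        """Apply action (u, v, w) and return (reward, done)."""
        for i, ui in enumerate(u):
            for j, vj in enumerate(v):
                for k, wk in enumerate(w):
                    self.state[i][j][k] -= ui * vj * wk
        self.steps += 1
        solved = self.is_zero()
        done = solved or self.steps >= self.max_steps
        reward = -1  # every move costs one multiplication
        if done and not solved:
            # Assumed stand-in penalty: number of nonzero entries left,
            # which upper-bounds the rank of the residual tensor.
            reward -= sum(x != 0 for mat in self.state
                          for row in mat for x in row)
        return reward, done


# A small 2x2x2 target that is the sum of two rank-one tensors.
target = [[[0, 1], [0, 0]], [[0, 1], [1, 0]]]
game = TensorGame(target, max_steps=5)
first = game.step([1, 1], [1, 0], [0, 1])
second = game.step([0, 1], [0, 1], [1, 0])
```

With this reward, maximizing the return is the same as finding a decomposition with as few rank-one terms as possible.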



Solve the TensorGame with DRL and MCTS.

- Network Architecture:

- Torso: Modified transformer architecture.

- Policy head: Transformer architecture.

- Value head: 4-layer MLP that outputs estimates of certain quantiles.

- Demonstration Data: Random sample factors

- Objective: Quantile regression for value network

- Input Augmentation: Apply random change of basis on
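The demonstration-data and augmentation bullets can be illustrated together. This is a sketch under stated assumptions, not the paper's pipeline: factor entries are drawn from {-2, ..., 2}, the change of basis is applied along each of the tensor's three modes, and the basis matrices are built from random row additions so they stay invertible over the integers. All function names are hypothetical.

```python
import random


def outer_sum(factors, dims):
    """Sum of rank-one terms u (x) v (x) w over all (u, v, w) in factors."""
    I, J, K = dims
    T = [[[0] * K for _ in range(J)] for _ in range(I)]
    for u, v, w in factors:
        for i in range(I):
            for j in range(J):
                for k in range(K):
                    T[i][j][k] += u[i] * v[j] * w[k]
    return T


def sample_demonstration(rank, dims, rng, values=(-2, -1, 0, 1, 2)):
    """Sample random factors first, then build the tensor they decompose."""
    factors = [tuple([rng.choice(values) for _ in range(d)] for d in dims)
               for _ in range(rank)]
    return outer_sum(factors, dims), factors


def random_unimodular(n, rng, num_ops=4):
    """Identity plus random row additions: determinant stays 1."""
    M = [[int(i == j) for j in range(n)] for i in range(n)]
    for _ in range(num_ops):
        i, j = rng.sample(range(n), 2)
        s = rng.choice((-1, 1))
        M[i] = [x + s * y for x, y in zip(M[i], M[j])]
    return M


def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def change_basis(T, A, B, C):
    """Apply A, B, C along the first, second, and third mode of T."""
    I, J, K = len(T), len(T[0]), len(T[0][0])
    return [[[sum(A[i][a] * B[j][b] * C[k][c] * T[a][b][c]
                  for a in range(I) for b in range(J) for c in range(K))
              for k in range(K)] for j in range(J)] for i in range(I)]


rng = random.Random(0)
dims = (4, 4, 4)
tensor, factors = sample_demonstration(3, dims, rng)
A, B, C = (random_unimodular(d, rng) for d in dims)
new_tensor = change_basis(tensor, A, B, C)
# The known factorization transforms along with the tensor.
new_factors = [(matvec(A, u), matvec(B, v), matvec(C, w))
               for u, v, w in factors]
```

Sampling the factors first gives a tensor with a known decomposition for free, and the change of basis turns one such pair into many equivalent training examples.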

Overview of AlphaTensor, from Fig.2 of Fawzi et al., 2022.

Please refer to the paper for figures of the architecture.
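The quantile-regression objective for the value head can be sketched with the plain pinball loss. This is a simplified stand-in: the paper's exact loss, the number of quantiles, and the quantile levels are not given in these notes, so the evenly spaced midpoint levels and the function name `quantile_loss` are assumptions.

```python
def quantile_loss(predicted_quantiles, target):
    """Mean pinball loss of the predicted quantiles against one return.

    The i-th of n outputs is treated as the estimate of quantile level
    tau_i = (i + 0.5) / n (an assumption of this sketch).
    """
    n = len(predicted_quantiles)
    total = 0.0
    for i, q in enumerate(predicted_quantiles):
        tau = (i + 0.5) / n
        diff = target - q
        # Under-estimates are weighted by tau, over-estimates by 1 - tau.
        total += max(tau * diff, (tau - 1.0) * diff)
    return total / n


# Two quantile outputs, observed return of -7 (seven moves were played).
loss = quantile_loss([-8.0, -7.0], -7.0)
```

Because the weighting is asymmetric, each output is pulled toward a different quantile of the return distribution rather than toward its mean.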


Results and Costs

- Results

- SOTA matrix multiplication algorithms for certain matrix sizes.

- SOTA algorithm for multiplying

- Re-discover the Fourier basis.

- Training Costs

- Training uses TPU v3 and TPU v4 hardware and takes a week to converge.

Official Resources

- [Nature 2022] Discovering faster matrix multiplication algorithms with reinforcement learning [paper][blog][code] (citations: 98, 78, as of 2023-04-28)